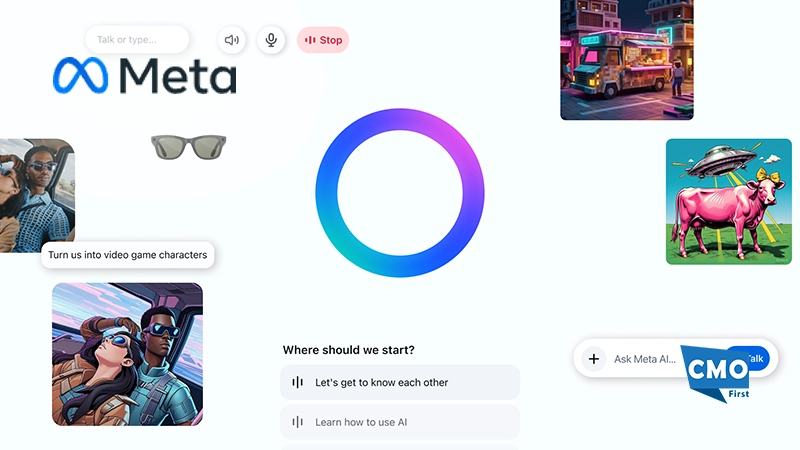

We’re launching a new Meta AI app built with Llama 4, a first step toward building a more personal AI. People around the world use Meta AI daily across WhatsApp, Instagram, Facebook and Messenger. And now, people can choose to experience a personal AI designed around voice conversations with Meta AI inside a standalone app. This release is the first version, and we’re excited to get this in people’s hands and gather their feedback.

Meta AI is built to get to know you, so its answers are more helpful. It’s easy to talk to, so it’s more seamless and natural to interact with. It’s more social, so it can show you things from the people and places you care about. And you can use Meta AI’s voice features while multitasking and doing other things on your device, with a visible icon to let you know when the microphone is in use.

Hey Meta, Let’s Chat

While speaking with AI using your voice isn’t new, we’ve improved our underlying model with Llama 4 to bring you responses that feel more personal and relevant, and more conversational in tone. And the app integrates with other Meta AI features like image generation and editing, which can now all be done through a voice or text conversation with your AI assistant.

We’ve also included a voice demo built with full-duplex speech technology, which you can toggle on and off to test. This technology is trained on conversational dialogue to deliver a more natural voice experience, so the AI generates speech directly instead of reading out written responses. The demo doesn’t have access to the web or real-time information, but we wanted to provide a glimpse into the future by letting people experiment with it. You may encounter technical issues or inconsistencies, so we’ll continue to gather feedback to help us improve the experience over time.

SOURCE: Meta